Value creation in the age of AI

October 11, 2023

Sam Altman, CEO of OpenAI

We're standing at a defining moment in the history of technology. A decade ago, deep learning proved that it works. A few months ago, it was generative AI’s turn. This post aims to map out the golden opportunities, identify the pioneers, and help you position yourself in the AI gold rush.

What won’t work

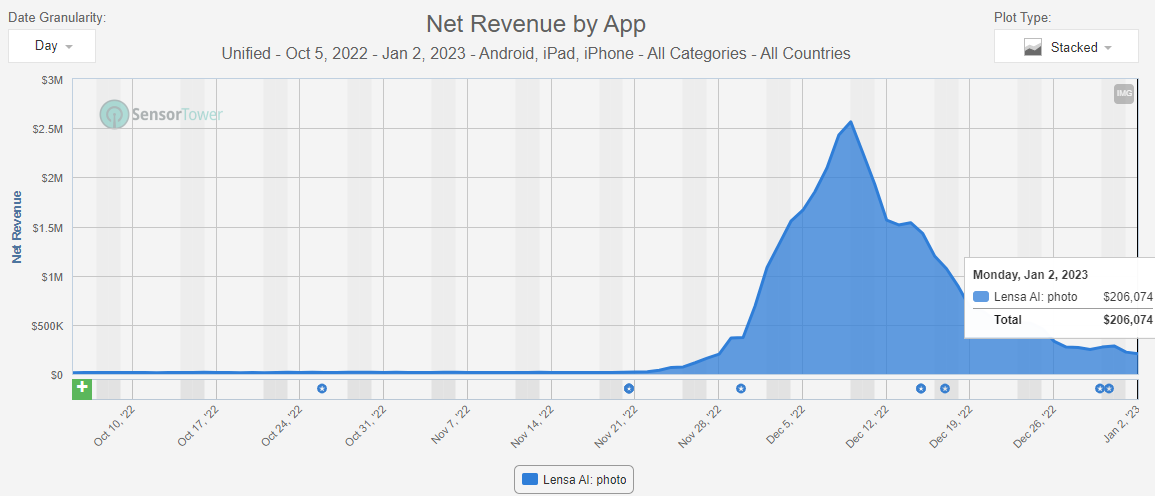

This is the revenue of Lensa AI, an app that generated avatars from users’ pictures. To build an app like Lensa, there’s no need to be a deep learning researcher. There’s actually no need to know anything about AI. You just need to use AI models already developed and perfected by other teams at OpenAI, Google, Mistral, and others.

The problem with this approach is that there’s no substantial value creation. The defensibility of this type of product is very thin. It can gain some initial traction by being one of the first movers, like Lensa AI, but there’s nothing stopping anyone from instantly becoming a strong competitor. Anyone who can code an app and is decent at marketing can offer an equivalent value proposition.

When the iPhone was first released, one of the early successes was an app that let you switch your flashlight on and off. When you build such an app, it is very easy to compete with you: you have no moat. The goal is not to build a flashlight app, but to build the next Instagram or Uber. These apps are built on top of smartphones, yet they have managed to create substantial value and defensibility of their own.

The same goes for AI. Building a tool that wraps a web app around GPT and sells it to a niche is a short-term move. That might work for a while, but it won’t result in a lasting impact.
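To make the point concrete, here is a minimal sketch of what such a wrapper boils down to. Everything in it is hypothetical and for illustration only: `Model` stands in for any hosted text-generation model, `fake_model` is a placeholder so the snippet runs without network access, and the template and function names are invented. It is not any real product's code.

```python
from typing import Callable

# Hypothetical stand-in for any hosted text-generation model:
# it takes a prompt string and returns generated text.
Model = Callable[[str], str]

# The only "proprietary" asset of the wrapper: a prompt template for one niche.
PROMPT_TEMPLATE = (
    "You are a copywriter for {niche}.\n"
    "Write a short product description for: {product}"
)


def build_prompt(niche: str, product: str) -> str:
    """Fill the template with the user's input."""
    return PROMPT_TEMPLATE.format(niche=niche, product=product)


def describe_product(model: Model, niche: str, product: str) -> str:
    """The entire 'product': build a prompt and forward it to someone else's model."""
    return model(build_prompt(niche, product)).strip()


def fake_model(prompt: str) -> str:
    """Placeholder model (an assumption) so the sketch runs offline."""
    return f"[generated text for a prompt of {len(prompt)} characters]"


if __name__ == "__main__":
    print(describe_product(fake_model, "independent coffee roasters",
                           "a single-origin espresso blend"))
```

Swapping `fake_model` for a call to a real provider is about all that separates this from a shippable wrapper, which is exactly why a competitor can reproduce it in an afternoon.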

Value in specialization

One way to create defensibility and value when building in AI is to specialize your models for a specific use case. The idea behind this approach is to make a model that significantly outperforms generalist models (GPT-4, Bard, LLaVA, …) on a specific task.

While the AI lawyer or the AI doctor hasn’t been created yet, there are some early examples of what a specialized AI can look like. Character.ai recently raised $150M in a round led by a16z to let users speak to virtual characters. Users can now speak to Elon Musk, Albert Einstein or Napoleon Bonaparte thanks to an AI that continuously improves itself to satisfy users.

Speaking to Elon Musk through Character.ai provides a very different experience (better outputs) than asking ChatGPT to answer as if it were Elon Musk. The result is a consumer product that is almost as sticky as the likes of Facebook and Snapchat in their early days: the average Character.ai user spends around two hours daily on the platform. This is mainly what justified the massive $150M investment.

UX play

The other way to create value is through UX (not UI). A lot of what we call GPT wrappers (Lensa AI for example) are just websites or apps that use existing AI models without adding any value. The user experience is substantially the same.

This can be counterintuitive for technical people, but value can indeed be created through UX and design. And to be honest, these are my favorite types of startups. The value and defensibility are in reinventing the way a user interacts with a product. Superhuman redesigned the email experience and made a fantastic business out of it. Cron reimagined the way we interact with our digital calendar before being acquired by Notion (which itself had its share of success by redesigning note-taking apps).

The challenge here is to do the same for AI startups. One interesting experiment, link here, was initiated by Google and aims to reinvent the UX for a specific niche (AI-powered tools for rappers, writers and wordsmiths).

In short, one way to build a strong AI business, without being a non-defensible GPT wrapper, is to bring substantial value through user experience.

Infrastructure play

In a gold rush, those who make the most money are the ones selling shovels. In other words, a powerful way to benefit from the AI wave is to build the developer tools and infrastructure necessary to build AI applications. The underlying assumption is that the total value created by the AI applications market will be large enough to sustain the developer tools and infrastructure startups that sit underneath it.

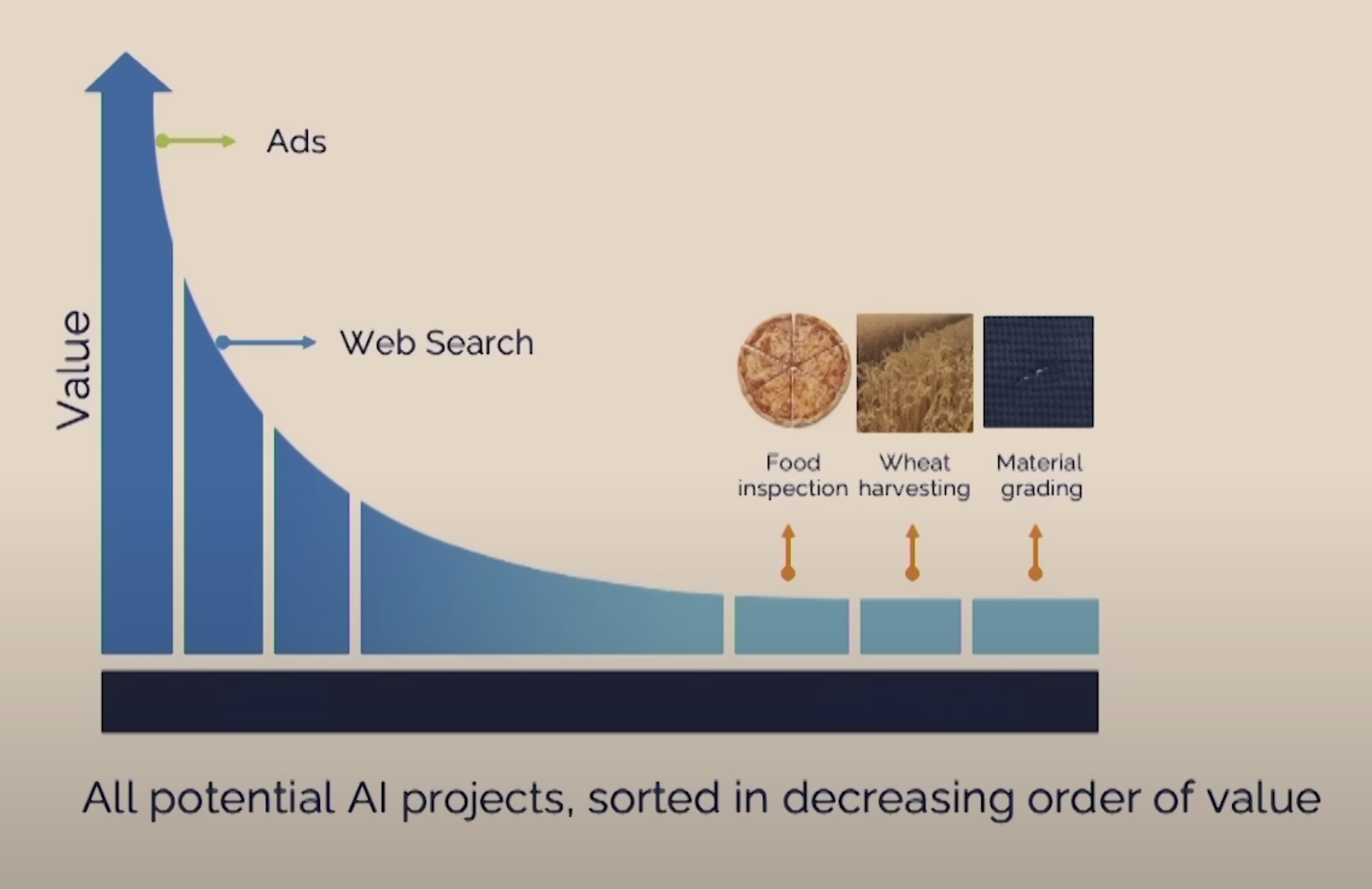

Beyond developer tools, in the pre-LLM (Large Language Model) landscape, using AI involved collecting data, labelling it, training your models, and then deploying them. This process took months to years and could cost millions of dollars. As a consequence, the only opportunities worth tackling were huge (e.g., ads or web search), leaving behind a lot of small use cases that weren’t economically viable to address.*

In the LLM era, a lot of these small opportunities can now be addressed at a fraction of the cost. The technical barrier to entry is extremely low, and the real opportunity is in the tools that will help users address their own specific use cases in a personalized and convenient way. With such tools, a head of marketing can now automate the writing of a newsletter, a CTO can perform security reviews automatically, and a sales rep can automatically reach an unprecedented level of personalization in each email. Taken separately, each of these opportunities has a very low barrier to entry and no moat, but the tools that allow anyone to build their own use cases will be defensible.

If you liked what you read, you can follow me on LinkedIn!

*: The image was taken from an excellent talk by Andrew Ng (former head of Google Brain and co-founder of Coursera).